Information

Is it possible to formulate a truly generalized theory of Information?

The question is an open invitation to ask:

- Is information part of the Goal-of-Life equation?

- Is information part of the set of Natural Laws?

- Is the meaning of information physical or philosophical?

- Is it even possible to have one definition of information?

- What if it is not possible?

- What if the purpose of Information is just an axis on a dimensional space to which we don’t have access?

- Is information metamorphic, does it have a continuum, and does it belong in a spectrum?

This deposit of interrogation points serves no purpose as an index of exploration, but for future records I’ll keep them as an inspiration section, and return to it if I find myself in need of such during this endeavor.

Still in an introductory and relevant note of intention, it is important to state the following:

There is no ambition in my words to dissect all the lexicon-related interpretations or summarize previous publications. The goal of this note, and the ones that will follow, is to naively follow the threads and sprouts from the exploration itself. Given that these are first attempts at independent research, and given the experimental nature of seeking first principles and training myself in refined thinking, proving, and argumentation, it is understandable that failure is a vertiginous eminence. I must remind myself that methodology without creativity is an arid field where nothing is found but drought and decay. Rain will only come if the intent is selfless, and the pursuit noble enough to transcend my flaws, inspire collective wisdom, and contribute to all Life.

As a tooling system to approach the question at stake, I propose the following, while inviting whoever may see this open-research page to comment and contribute:

1. Collect data
2. Sort data categorically
3. Experiment with visual abstraction using both Design and Lexicon approaches
   3.1 Examples will be sought across lexicon and academic domains
   3.2 Parallels will be tested visually in the time domain continuum
   3.3 Return to step 2 if necessary

* Then plot the abstractive objects in the time domain for a sequential overview

Why is it useful to question something so robust as the current definitions and the distinction between Information Theories?

Are there possibilities to further stretch it and find new fittings?

If the discovery of information is proven, how could it change our understanding of reality? Could misinformation become a crime? If we are not the true destination of information, what else is in the realm of Recipient/Observer, and qualifies as such? Even if nothing of value comes out of this, the model will not suffer from being questioned; the questioning would only solidify its significance.

In my day to day I’m constantly reminded of Shannon’s Information Theory, so as a starting point let’s take the axioms of the Mathematical Theory of Communication.

Building blocks of information

Symbols, connection - the relationship between them, symbolic relationship, information assembly theory, the distinction between man-made symbols and building blocks of Nature, even further, from a nonhuman perspective information generation/emergence.

For a Generalized Information Theory x Assembly Theory, the following is needed:

- A generalized smallest unit of information

- Can it be / is it fundamental?

- Does meaning emerge?

- Can the abundance of symbols and their connections be used for sampling regardless of context?

- Measurement coherence across the spectrum?

- Does the interconnectedness of context-differing information spaces reveal anything interesting?

- Synergetic Information, the addition of measurements, predictions vs unpredictable emergence?

Mathematical Theory of Communication

In the mathematical model of communication, the definition of the amount of information tempts any mind to extend its applications to the natural world. Attempts to generalize the model have so far counted 44 proposals, yet the meaning intended in the content of said information is left as a neglected artifact, and again function takes precedence over meaning. At this point the perfect excuse not to pursue the exploration any further presents itself: why would meaning be accounted for? After all, meaning is subjective; it cannot be estimated*.

# comment

# think which of the reasons and in what shape would be better to counter-argument.

# -> Also, rethink if this is indeed the best way to introduce the grand quest.

1948, Claude Shannon

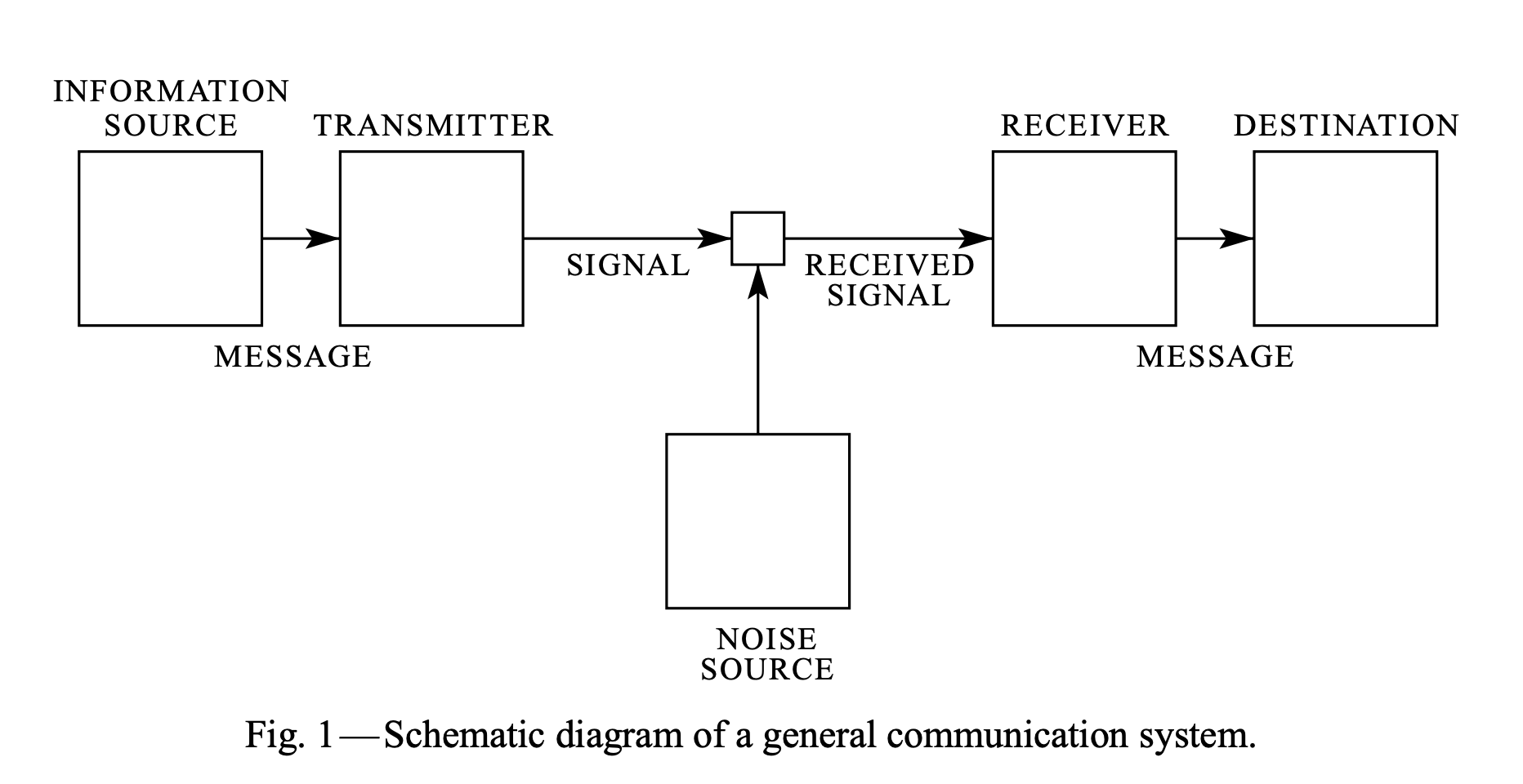

To introduce the study of the fundamental limits of communication, as applied to storage and transmission for example, we refer to Claude Shannon, who in 1948 presented a beautiful, thoughtful, and elegant schematic of information as a process, drawing clear boundaries between steps.

From an information source, a message X is transmitted via a medium: a signal is sent and then received, hopefully at the correct destination. For message X to be transmitted, Claude thought that a binary encoding made sense, and the bit as the smallest unit of information was born.

Information is treated in terms of uncertainty and modeled as random variables. The transmitter and the receiver are made to send and receive either zero or one, {0, 1}, and the bits contain no reference to the meaning they represent.
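As a minimal sketch of what "modeled as random variables" means for a two-symbol source, the snippet below computes the Shannon entropy of a source that emits 1 with probability p and 0 otherwise. The function name `binary_entropy` and the sample probabilities are illustrative choices, not part of any original formulation quoted here.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits per symbol, of a source emitting 1 with probability p."""
    if p in (0.0, 1.0):
        # A fully predictable source carries no uncertainty.
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


# Illustrative probabilities (assumed values, chosen only for the demonstration).
for p in (0.5, 0.9, 1.0):
    print(f"p = {p}: {binary_entropy(p):.3f} bits per symbol")
```

The measure depends only on the probabilities of the symbols, which is the sense in which the bits carry no reference to meaning: a fair coin and any other equiprobable two-symbol source score the same 1 bit per symbol.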

The neighboring relationship between symbols, and its predictive nature by association, is used frequently in data compression. It poses an intriguing correlation with the degree of similarity between the spatio-temporal neural fluctuations of multiple people, which increases with proximity. Additionally, neural oscillations have also been correlated with the spatial transfer of information in the brain, with perception, and with memory.

/++++

Thinking about boundaries in the agents and communication, and even information as a process dates x and highlights y and z.

/++++

DNA is a sequence of nucleotides, a long and monotonous chain of A, G, T, and C, and its information is conveyed in the combination of these 4 symbols. In this case, can we define:

The source of information

The destination

Size and properties of the set

Quantity
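For the last two items, one possible starting point is to treat a nucleotide string as the output of a four-symbol source and estimate its entropy from symbol frequencies. This is only a sketch under strong assumptions: symbols are treated as independent, the two sequences are made up for illustration, and `entropy_per_symbol` is a hypothetical helper name.

```python
import math
from collections import Counter


def entropy_per_symbol(sequence: str) -> float:
    """Empirical Shannon entropy, in bits per symbol, from symbol frequencies."""
    counts = Counter(sequence)
    total = len(sequence)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Made-up sequences, not real genomic data.
uniform = "ACGT" * 5              # all four symbols equally frequent
skewed = "A" * 17 + "CGT"         # one symbol dominates

print(len(set(uniform)), "symbols in the set")
print(f"uniform: {entropy_per_symbol(uniform):.3f} bits per symbol")
print(f"skewed:  {entropy_per_symbol(skewed):.3f} bits per symbol")
```

With four equiprobable symbols the estimate reaches its maximum of 2 bits per symbol, and any bias in the frequencies lowers it. The estimate says nothing about the source or the destination, which remain open in the list above.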

Quantifying

If the amount of information is tied to the probability of the event, is it logical to extrapolate that the amount of information is proportional to noise?

Then, quantifying the amount of information in DNA by the same principle, genetic mutations would convey more information.
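The link between probability and amount of information can be made concrete with the self-information (surprisal) of a single event, defined as the negative base-2 logarithm of its probability. The sketch below uses assumed probabilities purely for illustration; the function name `self_information` is a hypothetical helper.

```python
import math


def self_information(p: float) -> float:
    """Surprisal, in bits, of an event that occurs with probability p (0 < p <= 1)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log2(p)


# Assumed probabilities, not measured rates of any real process.
for label, p in (("common event", 0.5), ("rare event", 1 / 1024)):
    print(f"{label}: p = {p:.6f}, {self_information(p):.1f} bits")
```

Under this definition a rare event always scores more bits than a common one, whatever its cause. The measure itself does not separate a meaningful mutation from a random transmission error, which is why the question about noise above cannot be settled by the formula alone.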

Context

Known Context Dependency

Spaces set etc meaning, reductions, categorization, assembly theory

Source and Destination

Observer Dependency

If there is no observer or destination, is Information still Information? How does something qualify as an observer or a destination?

Patterns

Randomness, noise, extraordinary.

Entropy == The amount of not Noise

Reconcile? The amount of information finds roots in the exceptions and disruptions to a pattern, not the pattern itself.

First Principles

Information == Symbolic Object –> Made from symbolic units –> Symbols

Symbolic Object == Class of Object?

Object –> Physical Symbolic Object –> ? ethereal ? physical Meaning –> by Design –> Intrinsic Value? Objective Value –> Fundamental Value –> Fundamental Quality + Fundamental Quantity?